Electronic Decoding Diagnostic Inventory (EDDI)

UT College of Education

For this project, I worked with Dr. Nathan Clemens in the Department of Special Education at the University of Texas at Austin to design a diagnostic assessment of individual students' difficulties in reading words. Specifically, we designed software that provides in-depth diagnostic data on an individual student's word-reading skills, including letter sounds, word types, and spelling patterns. The goal was to help instructors understand each student's weaknesses in specific categories and keep track of their learning progress.

Problem:

Instructors' current pain points are inefficient data storage and performance tracking. Most instructors store data either in paper-based files or in manually typed spreadsheets. This is a tedious process, and it is easy to make errors when handling hundreds of students. It is also difficult to gauge whether students' performance is improving in the long term. With better tooling, a teacher could use the information from the assessment to design instruction that specifically targets a student's reading needs.

Methodology:

Research, Subject matter expert (SME) interviews, 1-1 interviews, Qualitative data analysis

Process:

First of all, I created interview scripts and moderated semi-structured interviews.

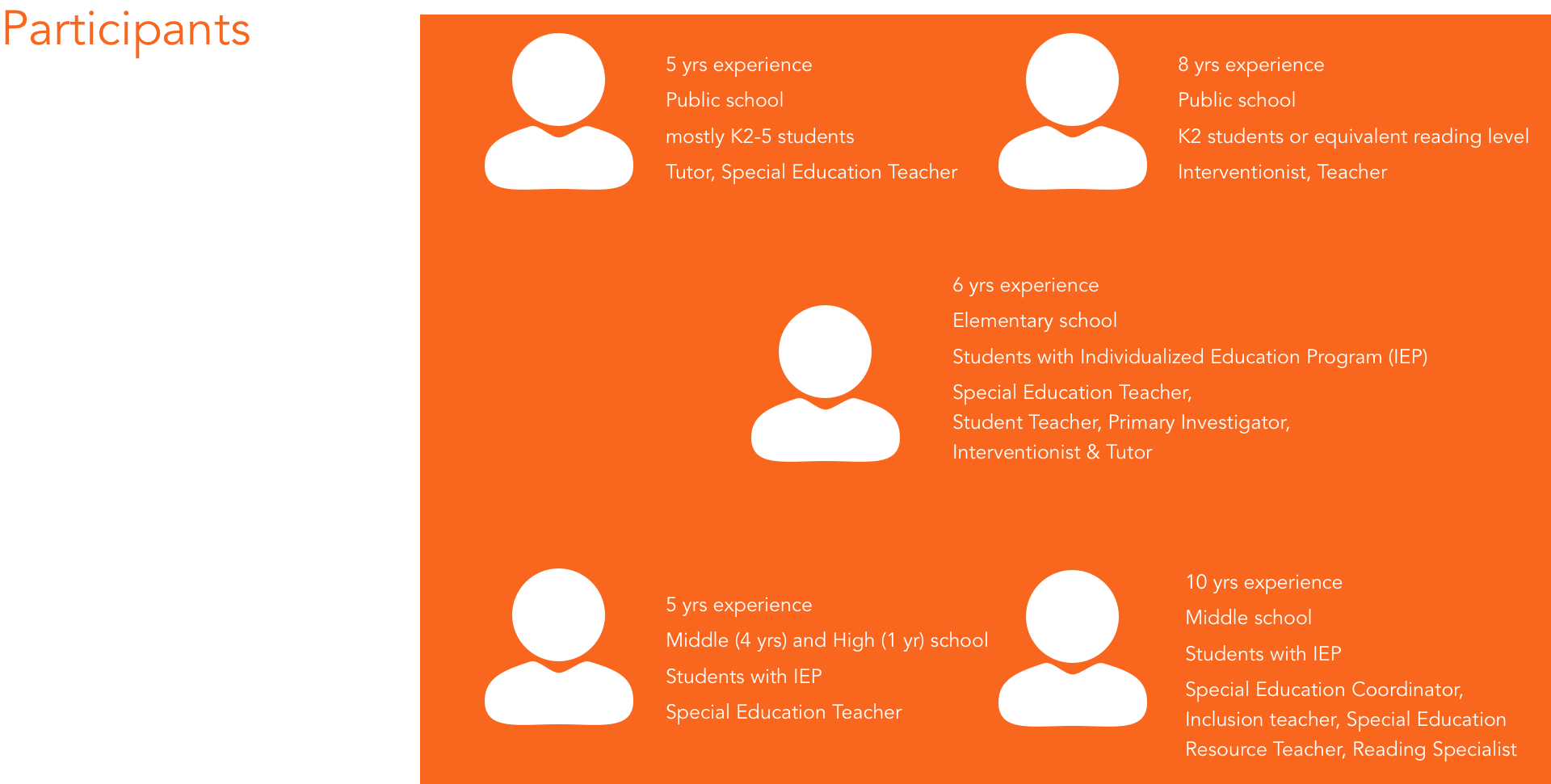

The interview script is divided into three parts. The first part gathers demographic information about the teachers. This enabled us to compare their suggestions and preferences across demographic groups to see if there were any correlations (e.g. preferences based on years of experience or the age group they work with). It also served as a useful reference for finding patterns or trends in the data during analysis.

The second part extended the conversation from the first section. I wanted to know how instructors currently assess students' reading ability and what they found useful or not useful. This section helped me understand how to increase adoption of the app by building on the workflow that instructors already have.

For the last section, I wanted to get their feedback on our concept for the assessment tool. First, I explained the assessment scenario. Each assessment was conducted with a student on a 1:1 basis. The student read lists of words from a tablet. On a separate device, the instructor scored each word according to whether it was read correctly or not. Immediately after the assessment, the software summarized the data in terms of the student's accuracy in reading various word types and spelling patterns.
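As a rough illustration of that summary step, here is a minimal Python sketch of how per-word scores could be rolled up into accuracy by word type and by spelling pattern. This is not the actual EDDI implementation: the `ScoredWord` record, the `accuracy_by` helper, and the category labels and sample words are all hypothetical and chosen only for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoredWord:
    """One word the student read aloud (hypothetical record layout)."""
    word: str
    word_type: str          # assumed label, e.g. "CVC"
    spelling_pattern: str   # assumed label, e.g. "short a"
    correct: bool           # the instructor's score for this word


def accuracy_by(responses, key):
    """Return {category: fraction of words read correctly} for one attribute."""
    totals = defaultdict(lambda: [0, 0])  # category -> [correct, attempted]
    for response in responses:
        tally = totals[getattr(response, key)]
        tally[0] += int(response.correct)
        tally[1] += 1
    return {category: c / n for category, (c, n) in totals.items()}


# Made-up scores from a single assessment session.
responses = [
    ScoredWord("cat", "CVC", "short a", True),
    ScoredWord("map", "CVC", "short a", False),
    ScoredWord("cake", "CVCe", "a_e", True),
    ScoredWord("rain", "vowel team", "ai", False),
]

by_type = accuracy_by(responses, "word_type")
by_pattern = accuracy_by(responses, "spelling_pattern")
```

Grouping on one attribute at a time keeps the summary easy to read for an instructor: a low score in a single category points directly at the skill that needs targeted instruction.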

Tools:

Sketch, Figma

What I learned:

- Use in-depth qualitative data to inform design principles and iterate on the product

- Collaborate closely with the designer to implement design changes based on topline findings

- Create interview scripts and design assessment tests according to the research questions

- Maintain communication with stakeholders, especially regarding blockers in the recruiting process, timeline changes, and project expectations

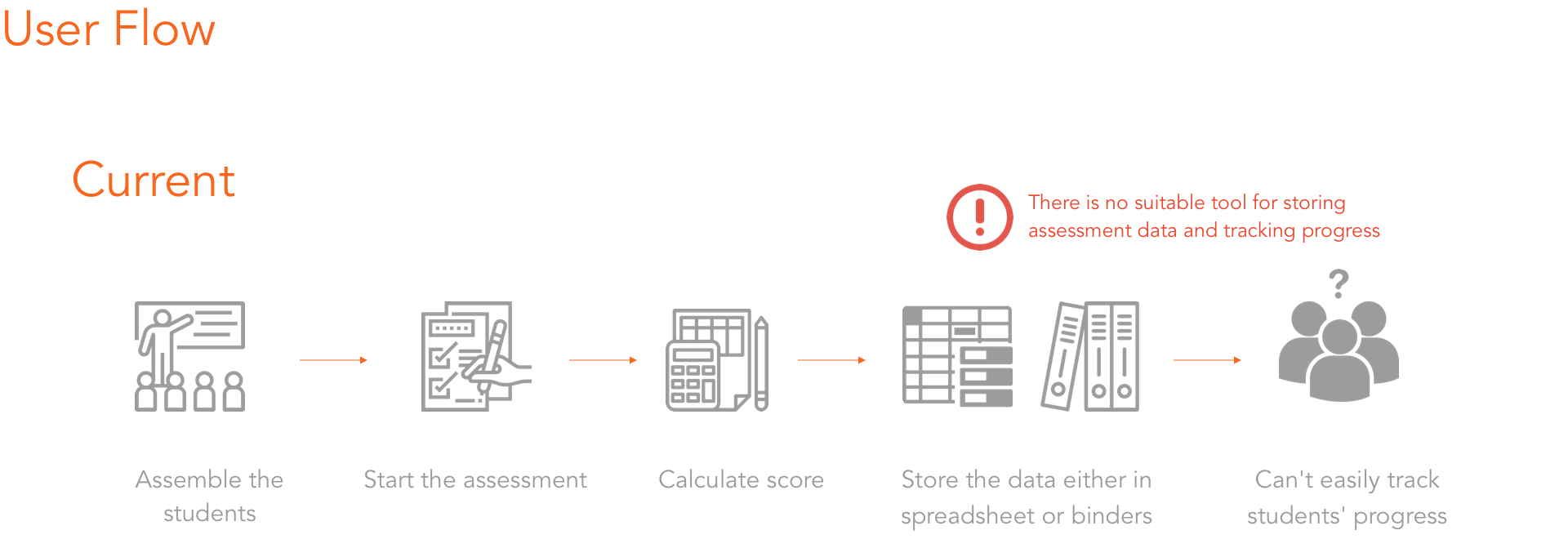

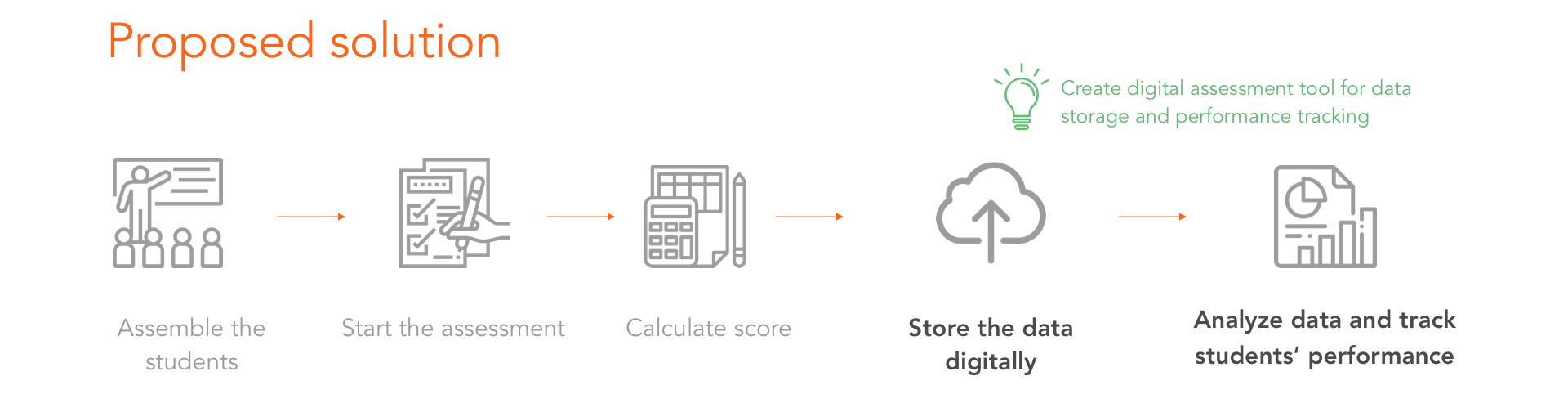

This is instructors' current process for assessing students: gathering students, starting the assessment, generating reports, and storing data.

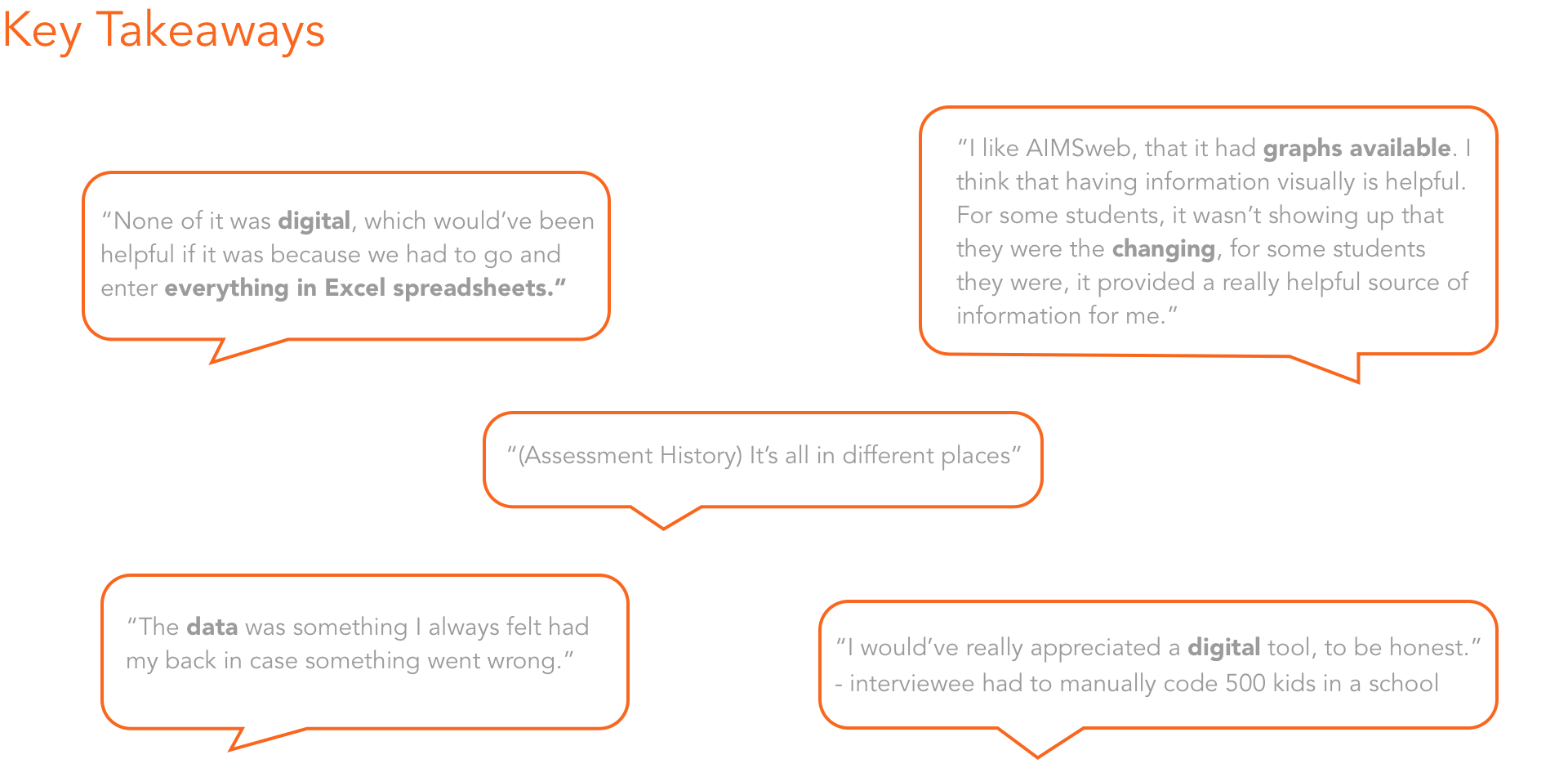

I found that instructors struggle to store data in a systematic way and to track students' performance.

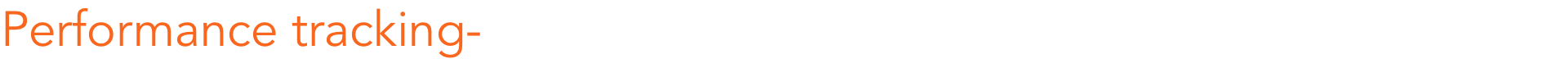

After I identified the pain points and proposed solutions, I discussed possible changes to the current prototype with the designer.

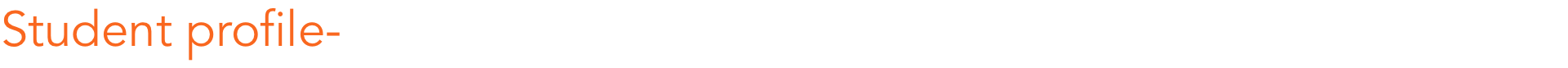

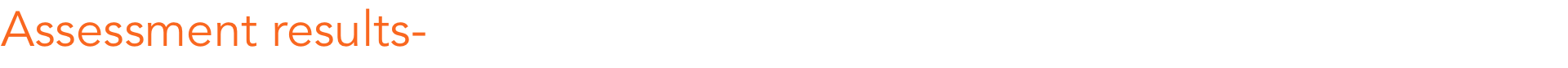

Here are some example pages that we made.